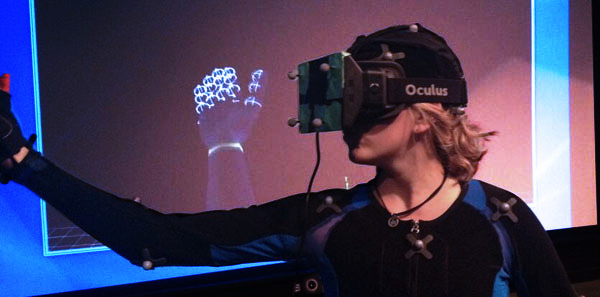

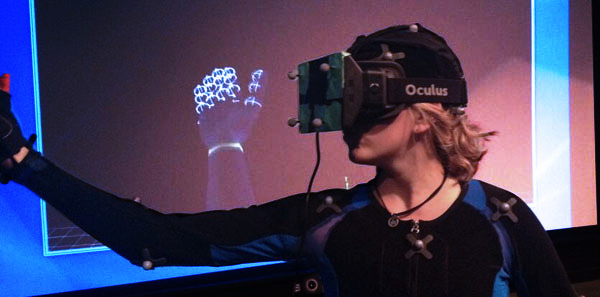

vIVE – Very Immersive Virtual Experience (Virtual Reality)

by S3D Centre

Graduation year: 2015

The initial goal of this project was to use passive optical motion capture to track an Oculus Rift and create an immersive virtual experience. The physical Oculus Rift is motion captured, and the data is piped to Unity in real time with low latency to drive the virtual Oculus Rift. The output is transmitted wirelessly to the device. The result is an untethered virtual experience, which can include hands, feet or even a full-body avatar.

For source code and application please visit:

www.research.ecuad.ca/projects/vive

Emily Carr University presents VIVE (Very Immersive Virtual Experience).

VIVE combines the Oculus Rift headset with motion capture systems to create a whole-body virtual experience. Being able to roam and interact with the environment creates a more realistic VR experience. Emily Carr researchers are using this system to investigate modes of interaction and networked presence in VR. The project is partially supported by the SSHRC partnership project Moving Stories, which focuses on qualitative movement analysis.

For more information on Moving Stories:

www.movingstories.ca

For more information on research at Emily Carr:

www.ecuad.ca/

www.research.ecuad.ca/s3dcentre/

www.mocap.ca/

The future goals for this project are:

- Develop open-source tools to create an immersive virtual experience

- Develop or adopt tools and protocols to allow virtual spaces to overlap

- Through observation and experimentation, explore new user experiences, interactivity and UI/UX language and protocols

The initial system was built using the following hardware and software:

- 40-camera Vicon motion capture system for realtime mocap

- Custom data translation code to facilitate communication between Vicon and Unity

- Unity Pro for realtime rendering

- Autodesk Maya for scene creation

- Dell workstations and NVIDIA graphics cards for data processing and rendering

- Paralinx Arrow for wireless HDMI (untethered operator)
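The data translation step between the mocap system and Unity can be illustrated with a small sketch. It is not the project's actual code. It assumes the mocap data arrives in a right-handed, Z-up frame in millimetres (a common Vicon configuration) and converts it to Unity's left-handed, Y-up frame in metres. The function names and the choice to swap the Y and Z axes are assumptions made for illustration.

```python
# Hypothetical helpers, not part of the vIVE source.
# Assumed input frame: right-handed, Z-up, millimetres.
# Target frame (Unity): left-handed, Y-up, metres.

MM_TO_M = 0.001


def vicon_to_unity_position(p_mm):
    """Convert an (x, y, z) position. Swapping Y and Z flips handedness."""
    x, y, z = p_mm
    return (x * MM_TO_M, z * MM_TO_M, y * MM_TO_M)


def vicon_to_unity_rotation(q):
    """Convert an (x, y, z, w) quaternion under the same axis swap.

    The swap is a reflection, so the vector part is permuted like a
    position and then negated, while w is left unchanged.
    """
    qx, qy, qz, qw = q
    return (-qx, -qz, -qy, qw)


if __name__ == "__main__":
    print(vicon_to_unity_position((1000.0, 2000.0, 3000.0)))
    print(vicon_to_unity_rotation((0.0, 0.0, 0.6, 0.8)))
```

If the capture system is configured with a different up axis or unit, the mapping above would need to change accordingly.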

The system has been extended to support:

- NaturalPoint (Motive) capture system

Links

Builds

- Vive Repeater 0.5 - Reads the data from Vicon or NaturalPoint and passes it on to a viewer app.

- Unity Construct 0.2 (Player) - A basic viewer with no Oculus support, intended for a third party to “see” into the virtual environment and for debugging.

- Vive Tuscany 0.1 - Use this app to test the Oculus Rift. It connects to the repeater.
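To make the repeater idea concrete, here is a minimal sketch of how a tracked rigid body pose could be packed into a datagram and forwarded to a viewer. The wire format, the port number and all function names are hypothetical; the actual Vive Repeater protocol is not described here and may differ.

```python
import socket
import struct

# Hypothetical wire format: 16-byte name, then position (x, y, z)
# and rotation quaternion (x, y, z, w) as little-endian 32-bit floats.
POSE_FORMAT = "<16s7f"
VIEWER_PORT = 9000  # assumed port, not taken from the project


def encode_pose(name, position, rotation):
    """Pack one rigid body pose into bytes."""
    return struct.pack(POSE_FORMAT, name.encode("ascii"), *position, *rotation)


def decode_pose(data):
    """Unpack bytes produced by encode_pose."""
    fields = struct.unpack(POSE_FORMAT, data)
    name = fields[0].rstrip(b"\0").decode("ascii")
    return name, tuple(fields[1:4]), tuple(fields[4:8])


def send_pose(sock, viewer_ip, name, position, rotation):
    """Forward one pose to a viewer over UDP."""
    sock.sendto(encode_pose(name, position, rotation), (viewer_ip, VIEWER_PORT))


if __name__ == "__main__":
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    send_pose(sock, "127.0.0.1", "OculusRift",
              (0.5, 1.25, -2.0), (0.0, 0.0, 0.0, 1.0))
    sock.close()
```

UDP is used in this sketch because a late pose is of little use to a head-tracked viewer, so dropping a packet is usually preferable to waiting for a retransmission.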

Instructions

- Start the Vicon or NaturalPoint mocap system, calibrate, etc.

- Put some markers on your Oculus Rift.

- Create a rigid body in Blade or Motive.

- Start the Repeater and click the Vicon or NaturalPoint tab.

- Set the correct host IP, or use 127.0.0.1 if it’s the same machine.

- Click Connect.

- Start the Vive Tuscany app and run it in full screen.

- If Tuscany is not running on the same PC as the repeater, press “Y”, type in the IP of the machine running the repeater, click Connect, then press “Y” again.

- Place the Oculus Rift in the capture volume, pointing towards the positive Z axis.

- Press the “1” key.

- Put the Oculus Rift on your head and explore!
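The instructions do not say what the “1” key does internally. One plausible reading is that it records a reference heading while the headset is known to point along the positive Z axis. The sketch below illustrates that idea only; the class and function names are hypothetical and not taken from the Vive Tuscany app.

```python
import math


def yaw_from_forward(forward):
    """Heading in degrees of a forward vector, measured from +Z toward +X."""
    fx, _, fz = forward
    return math.degrees(math.atan2(fx, fz))


def wrap_degrees(angle):
    """Wrap an angle into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


class YawCalibration:
    """Stores a heading offset captured at a known physical orientation."""

    def __init__(self):
        self.offset = 0.0

    def capture(self, measured_forward):
        # The headset physically points along +Z at this moment, so any
        # measured heading other than 0 is treated as an error to cancel.
        self.offset = -yaw_from_forward(measured_forward)

    def apply(self, measured_yaw):
        """Return the corrected heading for a later measurement."""
        return wrap_degrees(measured_yaw + self.offset)


if __name__ == "__main__":
    calibration = YawCalibration()
    calibration.capture((1.0, 0.0, 0.0))  # tracker reports +X instead of +Z
    print(calibration.apply(90.0))
```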

Basically Good Media Lab (BGML)

VIVE - Oculus Rift & Motion Capture Virtual Experience